Last week’s article described the difficulties developers face when working with distributed and/or microservice architectures, often without in-depth knowledge of the underlying network technologies that enable the components of those architectures to communicate.

As discussed, the main challenges relate to the way in which different services interact with each other rather than to the business logic of the software. This is where the infrastructure engineer comes in, making the two technologies work as one.

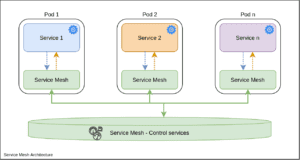

In a cloud-native ecosystem like Kubernetes, these challenges can be addressed by deploying a service mesh.

A service mesh is a distributed system that addresses microservice networking challenges in an integrated way. It provides functions that complement Kubernetes and is built on a dedicated layer that controls all service interaction in the orchestrator. This layer is usually made up of network proxies deployed alongside each service, without the service being aware of them.

The point of implementing a service mesh is to have an integrated platform that actively monitors all service traffic. A service mesh can address concerns related to fault tolerance, service security and application monitoring, and it provides a new non-intrusive way to implement communications efficiently. Compared to language-specific frameworks, a service mesh addresses the challenges outside of the services. The services are completely unaware of the fact that they are working with a service mesh.

As these systems are protocol-independent and work on layer 5 (session layer), they can be implemented in a polyglot environment. This abstraction enables developers to focus on their application business logic and it allows system engineers to operate infrastructure efficiently. So, it’s a win-win situation for both parties. Overall, a service mesh addresses concerns about these challenges and adds the following benefits:

● Traffic control

● Security

● Operation flow analysis

Operation flow analysis relies on distributed tracing: the microservices in a service mesh forward tracing headers with each request, which makes it possible to visualize interservice dependencies. A service mesh also captures metrics such as request volumes and failure rates.
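As a rough illustration of header forwarding, the sketch below copies tracing headers from an incoming request onto an outgoing one. The header names are an assumption (the commonly used B3 and W3C Trace Context headers, which this article does not name), and the helper functions are hypothetical rather than part of any particular mesh.

```python
# Assumed header names: common B3 and W3C Trace Context headers.
# Check the documentation of your mesh for the exact list it expects.
TRACE_HEADERS = (
    "x-request-id",
    "x-b3-traceid",
    "x-b3-spanid",
    "x-b3-parentspanid",
    "x-b3-sampled",
    "x-b3-flags",
    "traceparent",
    "tracestate",
)


def extract_trace_headers(incoming_headers):
    """Return only the tracing headers of an incoming request.

    Header names are matched case-insensitively and returned in lowercase.
    """
    lowered = {name.lower(): value for name, value in incoming_headers.items()}
    return {name: lowered[name] for name in TRACE_HEADERS if name in lowered}


def build_outgoing_headers(incoming_headers, extra=None):
    """Merge the caller's own headers with the forwarded tracing headers."""
    headers = dict(extra or {})
    headers.update(extract_trace_headers(incoming_headers))
    return headers
```

A service would call `build_outgoing_headers` with the headers of the request it is handling whenever it calls another service, so that the proxies can stitch the individual hops into one trace.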

Another important aspect is service log handling. In a traditional application, there is often a single log file recording everything. In a microservice architecture there are several log files, and all of them must be consulted to understand the behavior of a single flow. A service mesh provides centralized logging and graphical dashboards built over logs.

Now, assuming the fallacies of distributed computing (usually attributed to L. Peter Deutsch, with the eighth added by James Gosling) do describe real problems in distributed systems, how should we deal with them and how can a service mesh help?

● The network is NEITHER robust NOR reliable. Software engineers and architects need to be prepared for failures by introducing enough redundancy on the server side of the system and by adding retry mechanisms on the client side to handle transient network problems.

● Latency is high. Patterns like “Circuit breaker” manage latency scenarios by wrapping calls to remote services in fault-tracking objects. Once faults are detected, the external service is no longer invoked and an error is returned to the client immediately. If this is not taken into account, such failures can cascade through the whole system.

● Bandwidth is finite. As the number of services grows, applications need a mechanism for quota allocation and consumption tracking. Growth in microservices can also saturate load balancers and thus degrade performance. Client-side load balancing is generally a good approach in such situations.

● The network is NOT secure. In a microservice architecture, the responsibility for security lies with each development team. Inter-microservice communication needs to be controlled. Service-level authentication is essential in order to filter unknown connections.

● The topology changes. As mentioned, scaling a service horizontally can alter traffic flows and introduce unexpected failures. These problems can mostly be solved with a service discovery system.

● There are several systems administrators. Role-based access control (RBAC) is required to succeed here.

● Transport cost is NOT zero. Data transmission incurs costs, since data must be serialized between endpoints. Protocols like SOAP/XML are highly inefficient, and JSON is generally considered a better choice. Language-agnostic binary protocols such as Protocol Buffers improve on JSON further. Given that the information exchange takes place between applications, using a binary protocol for the whole ecosystem is preferable.

● The network is heterogeneous. A service mesh lets you decouple the infrastructure from the application code. It also simplifies the underlying network topology, because the network only has to provide the physical connection. All firewalls, load balancers and subnets can be removed, as they no longer need to control any service interaction.
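The circuit breaker mentioned in the latency item above can be sketched in a few lines. This is a minimal, single-threaded illustration of the pattern and not how any specific mesh implements it. The class name, the `max_failures` and `reset_timeout` parameters and their default values are all assumptions made for the example.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker refuses to call the remote service."""


class CircuitBreaker:
    """Hypothetical fault-tracking wrapper around calls to a remote service."""

    def __init__(self, max_failures=3, reset_timeout=30.0, clock=time.monotonic):
        self.max_failures = max_failures    # consecutive failures before opening
        self.reset_timeout = reset_timeout  # seconds to wait before a trial call
        self.clock = clock                  # injectable so tests can control time
        self.failures = 0
        self.opened_at = None               # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                # Open: fail fast without touching the remote service.
                raise CircuitOpenError("circuit open, call skipped")
            # Timeout elapsed: half-open, let one trial call through.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            # Open (or re-open after a failed trial call) and restart the timer.
            if self.opened_at is not None or self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        # A success closes the circuit and resets the failure counter.
        self.failures = 0
        self.opened_at = None
        return result
```

A caller would wrap each remote invocation, for example `breaker.call(fetch_orders, customer_id)`, where `fetch_orders` stands in for any function that talks to another service. In a service mesh this bookkeeping typically lives in the proxy next to the service, so the application code does not need to carry it.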

In summary, distributed components must be connected, managed and secured in order to create robust, powerful applications. By introducing a service mesh into your infrastructure, you can offload service discovery and load balancing, increase your adaptability, observability and security, and ultimately focus on creating better business logic.