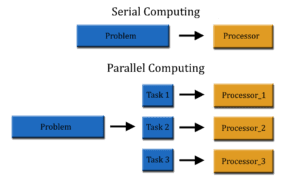

Parallel computing is a form of computation in which two or more processors are used to solve a problem or task. The technique is based on the principle that some tasks can be split into smaller parts and solved simultaneously.

Parallel computing has become the dominant paradigm in processor design, so it is essential to understand both its current applications and its future importance.

Figure 1 Serial versus parallel computing

A little history…

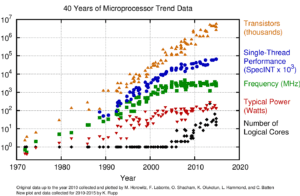

Until around 2004, raising the processor’s clock frequency was the main source of improvements in computer performance: a higher clock frequency shortens the execution time of each program. However, a higher frequency also increases power consumption. CPU power consumption is given by the following equation:

$$P = C \cdot V^2 \cdot f$$

where P is the power consumed, C is the capacitance switched per clock cycle, V is the voltage and f is the processor frequency. Increasing the processor frequency therefore increases power consumption proportionally.
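
To see why this relation favours several slower cores over one fast core, here is a small worked comparison. It takes the usual dynamic-power form $P = C \cdot V^2 \cdot f$ and assumes, purely for illustration, that halving the frequency lets the supply voltage drop to $0.8V$ and that the workload splits perfectly across two cores. Both figures are assumptions, not measurements.

$$
\begin{aligned}
P_{\text{1 core}} &= C \cdot V^2 \cdot f \\
P_{\text{2 cores}} &= 2 \cdot C \cdot (0.8V)^2 \cdot \frac{f}{2} = 0.64 \cdot C \cdot V^2 \cdot f
\end{aligned}
$$

Under these assumptions, two cores at half the frequency deliver the same nominal throughput for roughly 64% of the power.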

It’s mainly for this reason that parallel computing became established as the dominant paradigm. This is evidenced by the fact that most computers today (from supercomputers to personal computers) are multicore. Even some smartphones already have up to 8 cores (processors).

Given the current trend, it is reasonable to expect future processors to remain multicore, with an ever-growing number of processing elements, especially as the demand for high processing capacity keeps rising.

Figure 2 Evolution of microprocessors

The above image clearly shows the turning point that occurred around 2004. It also shows how processor frequency and power consumption have remained roughly constant in recent years while the number of processing elements keeps rising.

Types of parallelism

There are essentially three types of parallelism:

- Bit-level parallelism: this refers to the size of the data the processor can work with in a single operation. For example, a processor with a 32-bit word size can perform 4 independent 1-byte additions simultaneously, whereas a processor with a 1-byte word size would need 4 separate operations.

- Instruction-level parallelism: a program’s instructions are reordered and grouped for parallel execution. Modern processors include structures known as pipelines that divide instruction execution into stages. This enables different stages of multiple instructions to be executed at the same time.

- Task-level parallelism: a specific problem can be broken down into smaller tasks and solved concurrently by different processing elements (processors, threads, etc.).
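
Two of these levels can be sketched in a few lines of Python. The first part packs four bytes into one 32-bit word and adds them in a single pass, masking so that carries never cross from one byte into the next. The second part splits a sum of squares into chunks handled by a pool of workers. All names here (`packed_add_bytes`, `pack`, `unpack`, `chunk_sum`, `parallel_sum_of_squares`) are hypothetical and chosen for illustration only. A thread pool is used to keep the sketch simple; in CPython, CPU-bound work would typically need `ProcessPoolExecutor` to actually run on several cores.

```python
from concurrent.futures import ThreadPoolExecutor

# --- Bit-level parallelism: four 1-byte additions in one 32-bit word ---
LOW7 = 0x7F7F7F7F   # low 7 bits of each byte lane
HIGH1 = 0x80808080  # top bit of each byte lane


def packed_add_bytes(a: int, b: int) -> int:
    """Add four byte lanes packed in 32-bit words; each lane wraps modulo 256."""
    # Add the low 7 bits of every lane: a carry can reach bit 7 of its own
    # lane but can never spill into the neighbouring lane.
    low_sum = (a & LOW7) + (b & LOW7)
    # Fold in the top bit of each lane with XOR (addition without carry-out).
    return low_sum ^ ((a ^ b) & HIGH1)


def pack(values):
    """Pack four byte values (0..255) into one little-endian 32-bit integer."""
    return int.from_bytes(bytes(values), "little")


def unpack(word):
    """Split a 32-bit integer back into its four byte values."""
    return list(word.to_bytes(4, "little"))


# --- Task-level parallelism: split one problem into independent chunks ---
def chunk_sum(chunk):
    """Work done by one worker: sum of squares of its own chunk."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, n_workers=4, executor_cls=ThreadPoolExecutor):
    """Divide data into about n_workers chunks and combine the partial sums."""
    size = max(1, -(-len(data) // n_workers))  # ceiling division
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    with executor_cls(max_workers=n_workers) as pool:
        return sum(pool.map(chunk_sum, chunks))


if __name__ == "__main__":
    word = packed_add_bytes(pack([1, 2, 3, 4]), pack([10, 20, 30, 40]))
    print(unpack(word))                                  # [11, 22, 33, 44]
    print(parallel_sum_of_squares(list(range(1000))))    # 332833500
```

Passing `executor_cls=ProcessPoolExecutor` (also from `concurrent.futures`) is one way to spread the chunks over separate processes, at the cost of copying each chunk to its worker.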

The importance of parallel computing

Parallel computing is a fundamental technique in scientific research, especially in simulations, which involve complex calculations and operations that require a lot of processing capacity. It is also used to build different kinds of models (mathematical, statistical, climatic) and even in medical imaging.

Other relevant examples are real-time systems, artificial intelligence, graphics processing and servers. In servers, multicore processors are ideal because they allow many users to connect to the same service simultaneously (for example, in the case of a web server).

At Teldat, we always try to take full advantage of the hardware features available in our devices to provide maximum performance at all times.