Since the advent of computers, a large variety of processors with different instruction set architectures (ISAs) has been developed. Their design began with the definition of the instruction set and culminated in the implementation of a microarchitecture that complied with that specification. During the eighties, interest centered on determining the most desirable features of the instruction set. In the nineties, however, the focus shifted to developing innovative microarchitecture techniques applicable to any instruction set.

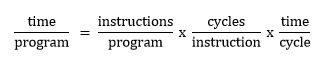

The main objective of each new generation of processors has been to increase performance over the previous generation and, more recently, to do so while also reducing power consumption. But how can performance be measured? Basically, in terms of how long it takes to execute a particular program. With a series of very simple manipulations, that execution time can be expressed as a product of factors, each with a precise meaning.
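In its usual textbook form, and assuming that this is the relation the discussion refers to, the expression reads as follows, with performance being the inverse of the execution time on the left-hand side:

$$
\frac{\text{Time}}{\text{Program}} = \frac{\text{Instructions}}{\text{Program}} \times \frac{\text{Cycles}}{\text{Instruction}} \times \frac{\text{Time}}{\text{Cycle}}
$$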

The first term to the right of the equals sign is the total number of dynamic instructions that the program needs to execute; the second is the average number of machine cycles consumed per instruction (CPI); and the third is the machine cycle time, that is, the inverse of the clock frequency. Performance can be improved by acting on any of the three terms. Unfortunately, they are not always independent, and reducing one of them can increase the others.
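To make the interaction between the three terms concrete, here is a minimal Python sketch. The function name `execution_time` and all the figures are hypothetical, chosen only for illustration: a redesign doubles the clock frequency but pays for it with a higher CPI, so the net gain is much smaller than the frequency alone would suggest.

```python
def execution_time(instructions: int, cpi: float, clock_hz: float) -> float:
    """Execution time in seconds: instruction count x CPI x cycle time."""
    cycle_time = 1.0 / clock_hz  # third term: inverse of the clock frequency
    return instructions * cpi * cycle_time


# Hypothetical baseline: 1e9 dynamic instructions, CPI of 1.5, 1 GHz clock.
baseline = execution_time(1_000_000_000, 1.5, 1e9)

# Hypothetical redesign: the clock doubles, but the CPI rises to 2.4.
redesign = execution_time(1_000_000_000, 2.4, 2e9)

speedup = baseline / redesign
print(f"baseline {baseline:.2f} s, redesign {redesign:.2f} s, speedup {speedup:.2f}x")
```

With these made-up numbers the clock frequency doubles, yet the program runs only about 1.25 times faster, because the second term grew while the third one shrank.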

One of the simplest techniques for acting on the second and third terms at a small hardware cost is pipelining. The system or unit is partitioned into K stages by means of buffers, splitting the process into K subprocesses, one per stage. For a system that operates on one task at a time, the throughput is 1/D, where D is the latency of a task, that is, the delay associated with its execution by the system. Pipelining lets each stage begin a new task as soon as the previous one leaves it, which happens every D/K units of time, so the ideal throughput rises to K/D.

Processors that achieve a CPI of one using this technique are called scalar; the RISC processors designed in the eighties are their main representatives. The same parameter defines superscalar processors as those capable of a CPI of less than one. The average value of its inverse, IPC (instructions per cycle), is a good measure of the effectiveness of the microarchitecture.

The major hurdle for pipelined processors, whether superscalar or not, is pipeline hazards, which force a stall of the affected stage and of the stages behind it, which hold the instructions that come next in program order. Three types are identified. Structural hazards arise from resource conflicts, when the hardware cannot support some combination of instructions at the same time. Control hazards arise when a branch instruction jumps to a destination different from the one predicted. Data hazards occur when an instruction accesses data that depends on the result of a previous instruction that is not yet available.

Data hazards are classified according to the order in which two instructions i and j, with i occurring before j in the program, access an operand; that order must be preserved in the pipeline. The varieties are: Read After Write (RAW), when j tries to read an operand before i writes it; Write After Write (WAW), when j tries to write an operand before i writes it; and Write After Read (WAR), when j tries to write an operand before i reads it. The latter two are false, or name, dependencies, which result from the limited number of registers defined in the architecture.
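The three varieties can be told apart simply by comparing the registers that two instructions read and write. The following Python sketch illustrates this; the `Instr` type, the `hazards` function and the three sample instructions are hypothetical names introduced here for illustration. It reports potential hazards only: whether a stall actually occurs depends on how close the two instructions are in the pipeline.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instr:
    text: str          # assembly-like text, for display only
    dest: str          # register written by the instruction
    srcs: frozenset    # registers read by the instruction


def hazards(i: Instr, j: Instr) -> set:
    """Potential data hazards between i (earlier) and j (later in program order)."""
    found = set()
    if i.dest in j.srcs:
        found.add("RAW")   # j reads what i writes: a true dependency
    if i.dest == j.dest:
        found.add("WAW")   # both write the same register: a name dependency
    if j.dest in i.srcs:
        found.add("WAR")   # j overwrites what i still has to read: a name dependency
    return found


add = Instr("add r1, r2, r3", "r1", frozenset({"r2", "r3"}))
sub = Instr("sub r4, r1, r5", "r4", frozenset({"r1", "r5"}))
mul = Instr("mul r1, r6, r7", "r1", frozenset({"r6", "r7"}))

print(hazards(add, sub))  # {'RAW'}
print(hazards(add, mul))  # {'WAW'}
print(hazards(sub, mul))  # {'WAR'}
```

Only the first pair carries a real flow of data. The other two conflicts exist solely because `mul` reuses the name `r1`, which is why they can be removed by giving its result a different register.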

How do we manage the dependencies that arise from pipelining, which are complicated further in superscalar processors with multiple units operating simultaneously? Basically, by introducing a series of control structures that are read and updated as the instruction advances through the different stages. For the purposes of our discussion, these stages are:

Fetch, Dispatch (IQ), Issue, {eXecute#1 || … || eXecute#N}, Writeback, Commit

The process is more or less as follows. The control unit fetches an instruction every clock cycle and passes it on to the following stage, Dispatch, either in the next cycle if it comes from the I-cache or a few cycles later if it comes from RAM. In Dispatch, four actions are carried out: (1) a physical register, not visible in the programming model, is selected from the free list (FL); (2) the temporary association between that register and the architectural register is written in the Rename Table (RAT); (3) an entry is reserved in the Reorder Buffer (ROB), a circular queue that holds the status of the physical registers associated with in-flight instructions; and (4) the instruction is placed in the Issue Queue (IQ) until its operands become available. Action (2) eliminates false dependencies (WAW and WAR) by dynamically assigning different names to a single architectural register, so that what is tracked is the value of the result rather than the name of the register; this is possible because there are more physical registers than the architecture defines.

Once the operands are available, the instruction is issued in the next clock cycle to the execution unit, provided the Scoreboard (SB) determines that the operation can proceed without hazards. In that case the operation starts a cycle later in the execution unit (eXecute#K), which the SB associates with the physical register that will hold the result and whose progress it updates every clock cycle. When the operation finishes, the execution unit updates the physical register at the Writeback stage, one or several cycles later. At the same time, the status of the entry associated with that register in the ROB is updated to “completed” and the status of the operation in the SB is updated to Writeback. If the instruction in the Writeback state is a store, the content of the architectural register is temporarily transferred to an intermediate structure called the Finished Store Buffer (FSB), which holds the data in case any prior instruction in program order, still underway in one of the execution units, generates an exception (assuming a precise exception model).

The instruction is committed in the next stage of the pipeline, one or several cycles later, when the ROB head pointer points to it in the circular queue. The content of the physical register is then transferred to the architectural register, updating the state of the machine; the association between the physical and architectural registers held in the RAT is freed; the physical register is returned to the pool in the FL; and, in the case of a store, the FSB content is transferred to memory, whether D-cache or RAM.
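The interplay between the FL, the RAT and the ROB described above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not a description of any real processor: the class `RenameSketch` and its methods are hypothetical, and the Issue Queue, the Scoreboard and the FSB are left out, so the result values are simply handed to `writeback` by the caller.

```python
from collections import deque


class RenameSketch:
    """Toy model of Dispatch, Writeback and Commit with an FL, a RAT and a ROB."""

    def __init__(self, arch_names, num_phys):
        self.arch = {name: 0 for name in arch_names}  # architectural state
        self.phys = [None] * num_phys                  # physical register values
        self.free_list = deque(range(num_phys))        # FL
        self.rat = {}                                  # RAT: arch name -> physical index
        self.rob = deque()                             # ROB: program order, head at the left

    def dispatch(self, dest, srcs):
        # Sources are looked up before dest is renamed, so an instruction
        # that reads and writes the same register sees the older value.
        operands = [("phys", self.rat[s]) if s in self.rat else ("arch", s) for s in srcs]
        if not self.free_list:
            raise RuntimeError("stall: free list is empty")
        p = self.free_list.popleft()                   # action (1)
        self.rat[dest] = p                             # action (2)
        self.rob.append({"dest": dest, "phys": p, "done": False})  # action (3)
        return p, operands

    def writeback(self, p, value):
        self.phys[p] = value
        for entry in self.rob:
            if entry["phys"] == p:
                entry["done"] = True                   # ROB status: completed

    def commit(self):
        """Retire completed instructions from the ROB head, in program order."""
        retired = []
        while self.rob and self.rob[0]["done"]:
            entry = self.rob.popleft()
            self.arch[entry["dest"]] = self.phys[entry["phys"]]
            if self.rat.get(entry["dest"]) == entry["phys"]:
                del self.rat[entry["dest"]]            # free the association
            self.free_list.append(entry["phys"])       # back to the pool
            retired.append(entry["dest"])
        return retired


core = RenameSketch(["r1", "r2", "r3", "r4", "r5", "r6", "r7"], num_phys=4)
p_add, _ = core.dispatch("r1", ["r2", "r3"])         # add r1, r2, r3
p_sub, sub_ops = core.dispatch("r4", ["r1", "r5"])   # sub r4, r1, r5 (RAW on r1)
p_mul, _ = core.dispatch("r1", ["r6", "r7"])         # mul r1, r6, r7 (WAW and WAR on r1)

core.writeback(p_mul, 42)      # mul finishes first, out of order
first = core.commit()          # nothing retires: add is still at the ROB head
core.writeback(p_add, 5)
second = core.commit()         # only add retires
core.writeback(p_sub, 3)
third = core.commit()          # sub and mul retire, in program order
```

Because `add` and `mul` receive different physical registers, `mul` can complete early without disturbing the value that `sub` is waiting for, while the in-order commit keeps the architectural state consistent with program order.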

Most processors used in TELDAT routers belong to Freescale’s PowerPC family. They are superscalar and, of course, incorporate all the techniques described above. The issue queue in our example was centralized, whereas in this architecture it is distributed, with one queue per execution unit. When an instruction is dispatched to one of the Issue Queues (IQ), a rename register (RR), equivalent to the physical register, is assigned to the result of the operation, and an entry is allocated in the Completion Queue (CQ), which performs the functions of the ROB. At the end of the issue stage, instructions and their operands, if available, are latched into the reservation stations (RS) of the execution unit. When execution completes, the RR and its status in the CQ are updated. Completed instructions are removed from the CQ in program order, and the results are transferred from the RR to the architecture-defined registers a cycle later.

In this article we have tried to show, without going into detailed justifications, the structures that appeared alongside the first RISC processors back in the eighties, and what motivated them: the pipelining concept, the hazards, both real and avoidable, the register renaming technique, and so on. These ideas stem from the algorithm developed by Robert Tomasulo at IBM in 1967 and first implemented in the floating point unit of the IBM System/360 Model 91, and they laid the foundation for the out-of-order execution found in today’s processors. I hope that the concise nature of this article has, if nothing else, aroused the curiosity of the kind reader.